Stateful vs Stateless AI Agents: Which Architecture Wins? [2026]

Stateful agents remember across turns. Stateless agents scale infinitely. Most teams pick wrong. Here's the decision framework with code examples, production trade-offs, and the hybrid pattern nobody talks about.

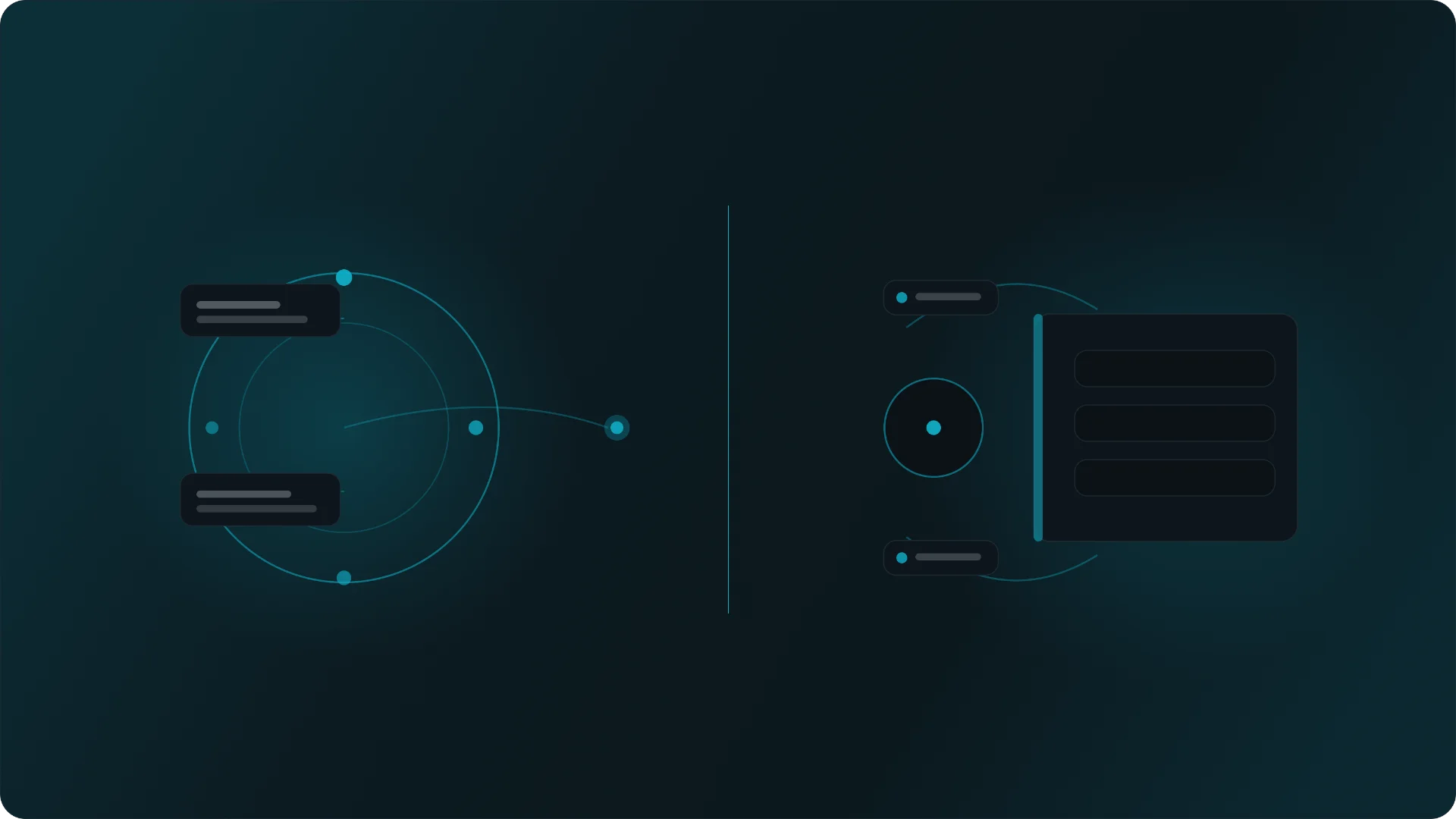

Quick Answer: Stateful vs Stateless AI Agents in One Look

When building AI agents, the distinction between stateful and stateless comes down to one thing: where does memory live between requests? Most LLM APIs (GPT-5, Claude, Llama, and others) are stateless by default. They do not remember anything between API calls unless you explicitly pass context back in. What looks like "chat memory" in OpenAI's SDK is typically client-side state that your code sends with each request.

A stateless AI agent handles every request as a standalone transaction: input → prompt → model → output. There is no database call, no session lookup, no persisted memory. All context must be embedded directly in the prompt. A stateful AI agent, by contrast, reads prior state from an external store (in-memory dict, Redis, Postgres, etc.) before constructing the prompt, then writes updated state back after the model responds. The agent "remembers" because you made it remember.

Here's the difference at a glance:

- Memory: Stateless agents have none between calls; stateful agents persist history, preferences, or workflow progress externally.

- Implementation complexity: Stateless is a simple function handler; stateful requires schema design, serialization, and consistency handling.

- Scalability: Stateless scales horizontally with simple load balancers; stateful may need sticky sessions or partitioned stores.

- Typical use cases: Stateless for one-shot tools (classification, code explanation); stateful for multi-step workflows and conversational assistants.

If you're skimming this article: stateless is simpler but forgets everything; stateful remembers but costs you in complexity. The rest of this guide shows you exactly how to implement each.

What Is an AI Agent?

An AI agent is a system that takes goals and inputs, calls models or tools, and produces actions or responses. Agents built in 2024-2025 typically wrap LLM calls with code that handles tool invocation, state management, and control flow. They orchestrate multiple steps, make decisions dynamically, and can interact with external APIs.

This article focuses specifically on how agents handle state—conversation history, user preferences, session data, and workflow progress—rather than general agent theory. State can belong to different entities depending on your design:

- user_id: Long-term personalization and preferences.

- conversation_id or session_id: A specific chat thread.

- workflow_id or job_id: Multi-step background pipelines.

- document_id: Processing state for a specific file or record.
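To make the ownership idea concrete, here is a minimal sketch of how state keys might be namespaced by entity type. The `StateKey` class and the `agent_state:` prefix are hypothetical names chosen for illustration, not part of any particular framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StateKey:
    """Identifies which entity a piece of agent state belongs to."""

    scope: str      # e.g. "user", "conversation", "workflow", "document"
    entity_id: str

    def as_store_key(self) -> str:
        # Namespacing by scope keeps a user_id and a workflow_id that share
        # the same raw value from colliding in one key-value store.
        return f"agent_state:{self.scope}:{self.entity_id}"


user_key = StateKey("user", "42").as_store_key()
workflow_key = StateKey("workflow", "42").as_store_key()
print(user_key)
print(workflow_key)
```

Keeping the scope in the key also makes it easier to apply different retention rules per entity type, for example expiring conversation state sooner than user preferences.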

What Is a Stateless AI Agent?

A stateless AI agent handles each request independently, never reading or writing persistent state. Any "memory" must be re-sent with every request. The model itself, whether it is hosted by OpenAI or Anthropic or run locally, is inherently stateless. When you see chat history in SDKs, that's client-side state your code passes back in.

The basic stateless flow works like this:

- Client sends a request with current input.

- Service builds a prompt from that input alone.

- Service calls the model API.

- Response returns immediately; nothing is saved.
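The four steps above can be sketched as a single function. This is an illustration under assumptions: `fake_model` is a stand-in for a real LLM API call, and the spam-classification prompt is invented for the example.

```python
from typing import Callable

ModelFn = Callable[[str], str]


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM API call; returns a canned label."""
    return "spam" if "free money" in prompt.lower() else "not_spam"


def classify(text: str, model: ModelFn = fake_model) -> str:
    # The prompt is built from the current input alone.
    prompt = f"Classify the following message as spam or not_spam:\n\n{text}"
    # Nothing is loaded before the call and nothing is saved after it.
    return model(prompt)
```

Because `classify` depends only on its arguments, any server instance can handle any request, and identical inputs can be cached.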

Stateless agents excel in scenarios where all necessary context is included in the input, such as classification APIs, one-shot question answering, code explanation endpoints, and image classification or spam detection systems.

Advantages of stateless agents include easier testing, scalability, and caching. However, they cannot remember previous interactions without embedding full history in the prompt, which leads to challenges with token limits and latency.

What Is a Stateful AI Agent?

A stateful AI agent loads prior state for a given key (user_id, session_id, workflow_id), uses it to construct the prompt or tool calls, then updates and persists new state after each step. The agent maintains continuity because you explicitly store and retrieve context.

What counts as "state" in an AI agent:

- Conversation history or summaries: Past messages that inform current responses.

- User profile and preferences: Settings like "prefers concise answers."

- Intermediate workflow results: Parsed documents, previous tool outputs.

- Long-running task progress: Steps completed in a multi-step job.

The generic stateful flow works like this:

1. Receive request with entity key.

2. Load state from store.

3. Combine stored context and new input to build the prompt.

4. Call model and any tools.

5. Compute updated state based on response.

6. Write state back to store.
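The six steps above could look roughly like the following. This is a sketch, not a production design: `STORE` is a plain dict standing in for Redis or Postgres, and `echo_model` is a hypothetical stand-in for an LLM call that simply reports how many user messages it was shown.

```python
from typing import Callable

ModelFn = Callable[[str], str]

# Hypothetical in-memory store; a real deployment would use Redis, Postgres, etc.
STORE: dict[str, dict] = {}


def echo_model(prompt: str) -> str:
    """Stand-in for an LLM call: counts the user lines in the prompt."""
    user_turns = sum(1 for line in prompt.splitlines() if line.startswith("user: "))
    return f"I have seen {user_turns} user message(s)"


def handle_turn(session_id: str, user_input: str, model: ModelFn = echo_model) -> str:
    # Steps 1-2: receive the request and load state for its key.
    state = STORE.get(session_id, {"history": []})
    # Step 3: combine stored context with the new input.
    lines = [f"{m['role']}: {m['content']}" for m in state["history"]]
    lines.append(f"user: {user_input}")
    prompt = "\n".join(lines)
    # Step 4: call the model (tool calls omitted in this sketch).
    reply = model(prompt)
    # Step 5: compute the updated state without mutating the loaded copy.
    new_history = state["history"] + [
        {"role": "user", "content": user_input},
        {"role": "assistant", "content": reply},
    ]
    # Step 6: write the state back.
    STORE[session_id] = {"history": new_history}
    return reply
```

Calling `handle_turn("s1", "hi")` twice with the same session ID yields a reply that reflects the first turn, while a different session ID starts from an empty history.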

Stateful agents are necessary for multi-step workflows, personalized assistants, and systems that must resume after failures. They can also reduce token usage in long conversations, because the full context lives in an external store and the agent sends the model only a summary or the relevant slice instead of the entire history.

Stateful vs Stateless AI Agents: Side-by-Side Comparison

Most "chatbots with memory" are actually stateful agents under the hood. The server stores past messages or summaries and feeds them back into an otherwise stateless model.

| Aspect | Stateless Agents | Stateful Agents |
|---|---|---|
| Memory handling | No persistence; all context passed in each request | Explicit persistent store keyed by entity ID |
| Implementation complexity | Simple request handler; no storage logic | Requires state schema, serialization, consistency handling |
| Latency | Single model call; sub-second responses typical | Model plus storage reads/writes; adds latency |
| Failure modes | Prompt overflow, token limit hits | State corruption, stale reads, race conditions |
| Scalability | Easy horizontal scaling; any server handles any request | May need sticky sessions or sharded stores |
| Typical use cases | One-shot tools, classification, API endpoints | Conversational assistants, multi-step workflows |
| Token economics | Full history resent each time; costs grow linearly | Saves tokens by storing context externally |
| Infrastructure cost | Lower; no storage layer needed | Higher due to storage but saves token costs |

Example: Stateless Agent in Practice

Consider an internal code assistant endpoint that explains code snippets without memory:

1. Developer sends { code_snippet, question }.

2. Service constructs a prompt with the snippet inline.

3. Service calls the LLM.

4. Response is returned; no state is saved.

This works well because the input size is bounded and all context fits in one prompt. Stateless agents offer predictable resource utilization and simpler deployment.
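A prompt builder for this endpoint might look like the sketch below. The `MAX_SNIPPET_CHARS` limit and the prompt wording are assumptions made for illustration; the point is that the bound on input size is enforced before any model call.

```python
# Hypothetical limit; pick a value that fits your model's context window.
MAX_SNIPPET_CHARS = 8_000


def build_explain_prompt(code_snippet: str, question: str) -> str:
    """Build a self-contained prompt from the request payload alone."""
    if len(code_snippet) > MAX_SNIPPET_CHARS:
        # Rejecting oversized input keeps every request within one prompt.
        raise ValueError("snippet too large for a single prompt")
    return (
        "You are a code assistant. Answer the question about the code.\n\n"
        f"Code:\n{code_snippet}\n\n"
        f"Question: {question}"
    )
```

Enforcing the bound up front is what keeps resource use predictable: the largest possible prompt is known in advance.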

How Developers Fake Memory in Stateless Agents

Developers often accumulate conversation history on the client and send the full history with every request ("prompt stuffing"). This approach has drawbacks:

- Token costs per request grow linearly with conversation length, so the cumulative cost of a conversation grows roughly quadratically.

- Context window limits cause truncation of older messages.

- Latency increases as prompts grow larger.

Stateless agents are best suited when continuity is not required.
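A quick back-of-the-envelope calculation shows how resending full history adds up. The sketch below assumes, purely for illustration, that every message (user or assistant) is the same size and that each turn resends all earlier messages plus the new user message.

```python
def prompt_tokens_at_turn(turn: int, tokens_per_message: int) -> int:
    """Input tokens sent on a 1-based turn when full history is resent.

    Before turn t the history holds 2 * (t - 1) messages (one user and one
    assistant message per earlier turn), plus the new user message.
    """
    return (2 * (turn - 1) + 1) * tokens_per_message


def cumulative_prompt_tokens(turns: int, tokens_per_message: int) -> int:
    """Total input tokens billed across all turns of one conversation."""
    return sum(prompt_tokens_at_turn(t, tokens_per_message) for t in range(1, turns + 1))


print(prompt_tokens_at_turn(10, 100))     # 1900
print(cumulative_prompt_tokens(10, 100))  # 10000
print(cumulative_prompt_tokens(20, 100))  # 40000
```

Under these assumptions the prompt for a single turn grows linearly, but doubling the conversation length quadruples the total input tokens sent.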

Example: Stateful AI Agent in Practice

Consider a support ticket triage agent handling multi-step reasoning:

1. Receives new ticket with user_id and text.

2. Loads user state from Redis or DB.

3. Calls LLM with ticket text plus retrieved context.

4. Decides next action using conditional logic.

5. Updates and persists state.

This design enables pausing and resuming tasks, multi-agent collaboration, and personalized interactions.
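As a rough sketch of the triage steps above, the following uses a dict in place of Redis and a keyword-matching function in place of the LLM. The `open_tickets` field, the escalation threshold of two, and the action names are all hypothetical.

```python
from typing import Callable

# Hypothetical per-user state store; stands in for Redis or a database.
USER_STATE: dict[str, dict] = {}


def fake_severity_model(prompt: str) -> str:
    """Stand-in for an LLM call that rates ticket severity."""
    return "high" if "outage" in prompt.lower() else "low"


def triage(user_id: str, ticket_text: str,
           model: Callable[[str], str] = fake_severity_model) -> str:
    # Load user state (step 2).
    state = USER_STATE.get(user_id, {"open_tickets": 0})
    # Call the model with ticket text plus retrieved context (step 3).
    prompt = (
        f"User has {state['open_tickets']} open tickets.\n"
        f"Ticket: {ticket_text}\n"
        "Severity (high/low)?"
    )
    severity = model(prompt)
    # Decide the next action with conditional logic (step 4).
    if severity == "high" or state["open_tickets"] >= 2:
        action = "escalate"
    else:
        action = "queue"
    # Update and persist state (step 5).
    USER_STATE[user_id] = {"open_tickets": state["open_tickets"] + 1}
    return action
```

The third low-severity ticket from the same user is escalated only because of stored state, which is something a stateless handler could not decide from the ticket text alone.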

Common Failure Modes in Stateful Agents

- Stale state: A request acts on state that another request has already changed.

- Partial updates: Incomplete state writes cause lost progress.

- Race conditions: Concurrent writes overwrite each other's updates and leave state inconsistent.

- Prompt drift: Summaries diverge from stored facts.

- Losing state across retries: Retries fail to reload or save state properly.

Avoid these by implementing versioning, atomic updates, concurrency controls, and treating stored state as the source of truth.
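One common way to combine versioning with atomic updates is optimistic concurrency: each save names the version it expects, and the store rejects the write if the state has moved on. The sketch below is an in-memory illustration; `VersionedStore`, `VersionConflict`, and `update_with_retry` are hypothetical names, and a real store would do the compare-and-write inside the database.

```python
import threading
from typing import Callable


class VersionConflict(Exception):
    """Raised when state changed between a load and the matching save."""


class VersionedStore:
    """Hypothetical in-memory store; each key holds (version, state)."""

    def __init__(self) -> None:
        self._data: dict[str, tuple[int, dict]] = {}
        self._lock = threading.Lock()

    def load(self, key: str) -> tuple[int, dict]:
        with self._lock:
            version, state = self._data.get(key, (0, {}))
            return version, dict(state)  # shallow copy of the stored state

    def save(self, key: str, expected_version: int, state: dict) -> int:
        # The version check and the write share one lock, so they are atomic.
        with self._lock:
            current_version, _ = self._data.get(key, (0, {}))
            if current_version != expected_version:
                raise VersionConflict(
                    f"{key}: expected v{expected_version}, found v{current_version}"
                )
            self._data[key] = (current_version + 1, dict(state))
            return current_version + 1


def update_with_retry(store: VersionedStore, key: str,
                      mutate: Callable[[dict], dict], max_attempts: int = 3) -> dict:
    for _ in range(max_attempts):
        version, state = store.load(key)
        new_state = mutate(state)
        try:
            store.save(key, version, new_state)
            return new_state
        except VersionConflict:
            continue  # another writer got there first; reload and reapply
    raise VersionConflict(f"{key}: gave up after {max_attempts} attempts")
```

A stale writer gets an explicit error instead of silently overwriting newer state, and the retry helper reloads before reapplying the change.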

Where State Should Live

- In-memory: Fast but ephemeral; not suitable for production.

- Session stores (Redis, Memcached): Good for short-lived conversational state.

- Databases (Postgres, DynamoDB): Durable, queryable state for long-term storage.

- Vector databases: Semantic recall for documents or memories.

- Event logs: Append-only stores for auditing and replay.

Avoid storing state solely in prompts, ad-hoc files, or client local storage without encryption.
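Whichever backend you choose, it helps to hide it behind a small interface so agent code does not change when the store does. The sketch below is one possible shape: `StateStore`, `InMemoryStore`, and `record_preference` are hypothetical names, and the in-memory class is meant for tests and local development only.

```python
import json
from typing import Optional, Protocol


class StateStore(Protocol):
    """Minimal interface a Redis- or Postgres-backed store could also satisfy."""

    def get(self, key: str) -> Optional[dict]: ...
    def put(self, key: str, state: dict) -> None: ...


class InMemoryStore:
    """Test/dev implementation; contents are lost when the process exits."""

    def __init__(self) -> None:
        self._blobs: dict[str, str] = {}

    def get(self, key: str) -> Optional[dict]:
        raw = self._blobs.get(key)
        return json.loads(raw) if raw is not None else None

    def put(self, key: str, state: dict) -> None:
        # Serializing to JSON here surfaces non-serializable state early,
        # before it would fail against a real external store.
        self._blobs[key] = json.dumps(state)


def record_preference(store: StateStore, user_id: str, name: str, value: str) -> dict:
    key = f"user:{user_id}"
    state = store.get(key) or {"preferences": {}}
    state["preferences"][name] = value
    store.put(key, state)
    return state
```

Agent code that takes a `StateStore` argument can run against `InMemoryStore` in tests and against a durable backend in production.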

When to Use Stateless vs Stateful Agents

Choose stateless agents when:

- Tasks are one-shot and context fits in a single prompt.

- High-throughput, low-latency APIs are needed.

- Privacy constraints prevent storing user data.

Choose stateful agents when:

- Conversational assistants require continuity and personalization.

- Multi-step workflows or resumable jobs demand persistent context.

- Multiple agents collaborate and share state.

Hybrid patterns:

- Stateless frontends relay to stateful orchestrators.

- Stateless tools plug into stateful supervisors managing workflows.

- Separate compute and state services for scalability.
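The second pattern, stateless tools under a stateful supervisor, can be sketched as follows. Everything here is illustrative: the two tools, the `PIPELINE` list, and the `Supervisor` class are hypothetical, and the supervisor's dict stands in for a durable store.

```python
from typing import Callable

Tool = Callable[[str], str]


# Stateless tools: pure functions of their input.
def normalize(text: str) -> str:
    return " ".join(text.split()).lower()


def redact_digits(text: str) -> str:
    return "".join("#" if ch.isdigit() else ch for ch in text)


PIPELINE: list[tuple[str, Tool]] = [
    ("normalize", normalize),
    ("redact_digits", redact_digits),
]


class Supervisor:
    """Stateful part: tracks each workflow's progress and current value."""

    def __init__(self) -> None:
        self.state: dict[str, dict] = {}

    def start(self, workflow_id: str, text: str) -> None:
        self.state[workflow_id] = {"next_step": 0, "value": text}

    def run_next(self, workflow_id: str) -> bool:
        """Run one step; return False if the workflow is already finished."""
        job = self.state[workflow_id]
        if job["next_step"] >= len(PIPELINE):
            return False
        _, tool = PIPELINE[job["next_step"]]
        job["value"] = tool(job["value"])
        job["next_step"] += 1
        return True
```

Because progress is recorded after every step, a workflow can stop after any `run_next` call and resume later from `next_step`, while the tools themselves stay trivially scalable and testable.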

Key Takeaways for Designing AI Agent State

- LLMs are stateless by default; stateful behavior requires explicit state storage.

- Stateless agents are simpler and resource-efficient but lack continuity.

- Stateful agents enable complex reasoning and personalization but add complexity.

- Treat stored state as the source of truth, not the prompt.

- Design schemas and utilities for safe state updates.

- Plan for concurrency, schema evolution, and retries.

- Use robust storage layers instead of in-memory or prompt-only memory.

Stateful agents improve user experience by recalling prior context and enabling continuous improvement.

Additional Considerations: Memory Management and Context Window

A crucial aspect of building stateful AI agents is effective memory management, which includes context window management and persistent memory design. Large language models (LLMs) have a limited context window, meaning they can only process a fixed number of tokens at once. That limit forces careful selection and summarization of stored data, so that the prompt retains the user data most relevant to the ongoing task.

Stateful agents must manage session data efficiently, balancing between short-term immediate context and long-term historical data. Techniques like summarization, pruning, and knowledge graphs help maintain data consistency and reduce compute costs while ensuring the system responds accurately to user inputs. Without proper memory management, stateful agents risk prompt drift or losing important prior inputs, which can degrade the quality of human-like conversations and context-aware interactions.
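A simple form of pruning is to keep a running summary plus as many of the newest messages as fit in a token budget. The sketch below assumes a crude whitespace word count as the token estimate (real tokenizers differ) and a summary that was produced elsewhere; `select_context` is a hypothetical helper.

```python
def estimate_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def select_context(summary: str, messages: list[str], budget: int) -> list[str]:
    """Return the summary followed by the newest messages that fit the budget.

    The summary is always included, even if it alone exceeds the budget.
    """
    remaining = budget - estimate_tokens(summary)
    kept: list[str] = []
    # Walk from newest to oldest and stop at the first message that does not fit.
    for message in reversed(messages):
        cost = estimate_tokens(message)
        if cost > remaining:
            break
        kept.append(message)
        remaining -= cost
    kept.reverse()  # restore chronological order
    return [summary] + kept
```

The summary carries long-term context while the recent messages carry immediate context, which is the short-term versus long-term balance described above.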

Operational Processes and Deployment Challenges

Deploying stateful agents involves additional operational overhead compared to stateless agents. The need for persistent storage and state persistence introduces maintenance overhead and potential security concerns, such as protecting sensitive data stored in databases or caches. Organizations must design robust operational processes to handle state synchronization, concurrency control, and recovery from failures.

Moreover, stateful workloads require more computational resources and careful resource efficiency planning to avoid excessive compute costs. Despite these challenges, the benefits of stateful components—such as enabling autonomous operation, multi-agent collaboration, and dynamic branching logic—make them indispensable for complex tasks and virtual assistants that demand contextual understanding and continuity.

Future Outlook: Hybrid and Distributed Systems

Many modern AI systems combine stateful and stateless agents to leverage the strengths of both architectures. Hybrid systems use stateless agents for lightweight, high-speed tasks and stateful components for workflows requiring persistent memory and context awareness. In distributed systems, managing data consistency and state persistence across multiple agents becomes critical to maintain operational efficiency and provide seamless user experiences.

As AI systems evolve, frameworks and orchestration tools continue to improve memory management and state handling, enabling developers to build more sophisticated, context-aware, and human-like AI agents that can recall prior inputs, learn from user feedback, and adapt dynamically over time.

Tacnode Context Lake plays a pivotal role in this evolution by offering a unified, cloud-native platform designed to support stateful AI agents with real-time ingestion, query, and analytics capabilities. Tacnode Context Lake consolidates transactional databases, data warehouses, vector stores, and stream processors into a single system, providing low-latency retrieval and elastic scaling that are essential for managing persistent memory and maintaining context across user sessions. Enterprises leveraging Tacnode Context Lake benefit from seamless integration with PostgreSQL-compatible tools and AI-native architectures, enabling efficient state management and enhanced operational processes.

For more details on how Tacnode Context Lake supports AI applications, see our Architecture Overview and the Context Lake Overview, which describe how it makes stateful and hybrid AI agent deployments scalable and reliable.

Written by Boyd Stowe

Building the infrastructure layer for AI-native applications. We write about Decision Coherence, Tacnode Context Lake, and the future of data systems.
